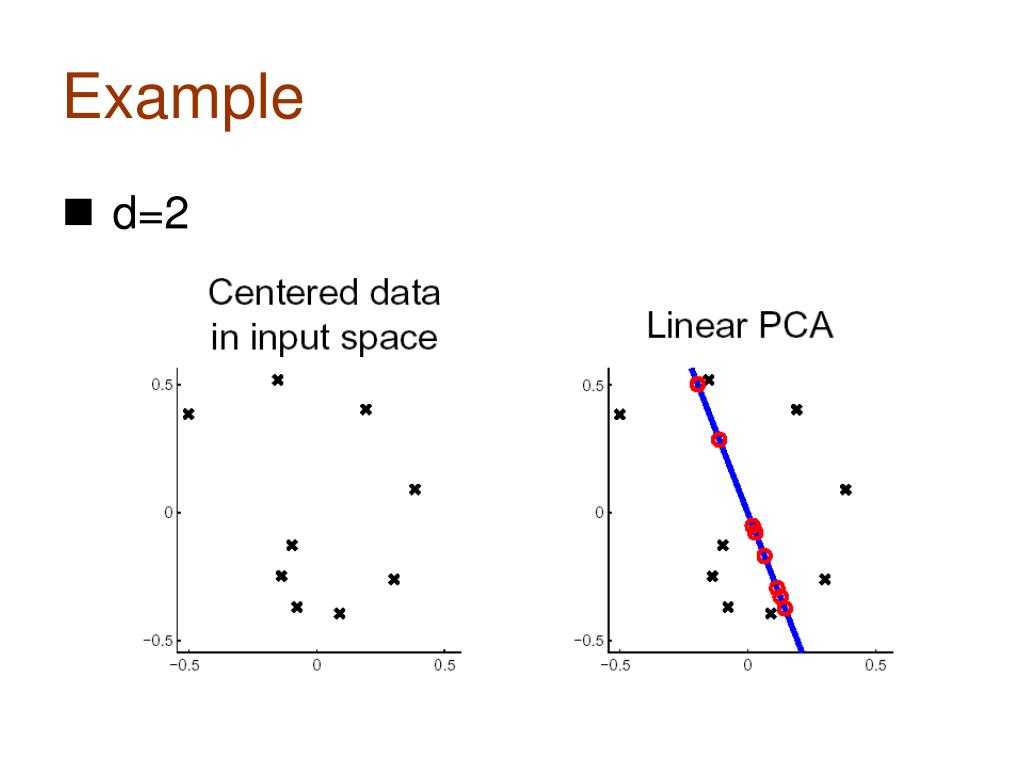

With the availability of high-performance CPUs and GPUs, it is now possible to tackle most regression, classification, clustering, and related problems with machine learning and deep learning models. However, several factors still cause performance bottlenecks when developing such models. A large number of features in the dataset is one of the major factors affecting both the training time and the accuracy of machine learning models.

In machine learning, "dimensionality" simply refers to the number of features (input variables) in a dataset. Adding features may improve a model's performance at first, but beyond some point further additions degrade it. When the number of features is very large relative to the number of observations in the dataset, many linear algorithms struggle to train efficient models. This is called the "Curse of Dimensionality".

Dimensionality reduction addresses this by reducing the number of features. The figure illustrates a 3-D feature space split into two 1-D feature spaces; later, if the features are found to be correlated, the number of features can be reduced even further. Techniques used for dimensionality reduction include Principal Component Analysis (PCA) and Generalized Discriminant Analysis (GDA).

Principal Component Analysis (PCA) is one of the most popular linear dimensionality reduction algorithms. This article focuses on the design principles of PCA and its implementation in Python. PCA is a projection-based method that transforms the data by projecting it onto a set of orthogonal (perpendicular) axes.

"PCA works on the condition that, when data in a higher-dimensional space is mapped to a lower-dimensional space, the variance, or spread, of the data in the lower-dimensional space should be maximum."

In the accompanying figure, the data has maximum variance along the red line in two-dimensional space.

Let's develop an intuitive understanding of PCA. Suppose you wish to distinguish between different food items based on their nutritional content. Which variable would be a good choice for differentiating the food items? If you choose a variable that varies a lot from one food item to another, you will be able to separate them properly. Your job will be much harder if the chosen variable is present in almost the same quantity in all the food items. What if the data doesn't have a variable that separates the food items properly? We can create an artificial variable through a linear combination of the original variables.

This is essentially what PCA does: it finds the best linear combinations of the original variables so that the variance, or spread, along each new variable is maximum.
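To make "projecting onto orthogonal axes that maximize variance" concrete, here is a minimal NumPy sketch of PCA computed from the eigendecomposition of the covariance matrix. It is not necessarily the implementation this article builds; the `pca` helper and the synthetic "food item" columns (protein, fat, sugar) are hypothetical, and the covariance-eigendecomposition route is one standard way to compute PCA (an SVD of the centered data is another).

```python
import numpy as np


def pca(X, n_components):
    """Hypothetical helper: project X (n_samples x n_features) onto its
    top principal components. Returns (projected data, components,
    variance along each component)."""
    # 1. Center each variable so the covariance measures spread around the mean.
    X_centered = X - X.mean(axis=0)
    # 2. Covariance matrix of the variables (features are in columns).
    cov = np.cov(X_centered, rowvar=False)
    # 3. Eigendecomposition; eigh suits symmetric matrices and returns
    #    orthonormal eigenvectors with eigenvalues in ascending order.
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1]  # sort so the largest variance comes first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # 4. Keep the top directions: mutually orthogonal unit vectors.
    components = eigvecs[:, :n_components]
    # 5. Each new variable is a linear combination of the original variables.
    projected = X_centered @ components
    return projected, components, eigvals[:n_components]


# Synthetic (hypothetical) nutritional data for 200 food items.
rng = np.random.default_rng(0)
protein = rng.normal(20, 5, size=200)
fat = 0.8 * protein + rng.normal(0, 1, size=200)  # correlated with protein
sugar = rng.normal(10, 2, size=200)
X = np.column_stack([protein, fat, sugar])

Z, W, variances = pca(X, n_components=2)
print("variance of each original variable:", X.var(axis=0, ddof=1).round(2))
print("variance along each principal component:", variances.round(2))
print("reduced data shape:", Z.shape)
```

Because a single original variable is itself a unit-length linear combination (weight 1 on that variable, 0 elsewhere), the variance along the first principal component can never be smaller than the variance of any single original variable. This is the "artificial variable" idea from the food example: a weighted mix of correlated variables such as protein and fat spreads the items out more than either one alone.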
